Continuous Integration for AWS Lambda using Bitbucket

Using Bitbucket, the Serverless Framework, Git Submodules, Bitbucket Pipelines, AWS Lambda, Node.js, and Native Bindings

This post explains how to set up a fully automated continuous integration/continuous deployment system for a Node.js AWS Lambda service built with the Serverless Framework. It is especially useful if your code needs a native package (such as node-sqlite3 or better-sqlite3), because you’ll build your package on roughly the same operating system that powers AWS Lambda. That said, the method works well for any AWS Lambda Serverless service.

This post is pitched at an *intermediate to advanced* level. It assumes your code already works and that you can deploy it with the Serverless Framework. I won’t dive too deep into extraneous topics, preferring a focused approach. But first, some introduction.

What are Continuous Integration and Continuous Delivery?

Continuous integration happens when code changes that you (and others on your team) make are combined into a shared branch in source control. This is generally accompanied by an automated build to ensure that the new code doesn’t break functionality. At my work we have over a dozen people continuously committing code, and it is essential to ensure that we catch broken code as soon as possible.

Continuous delivery is the next piece of a smooth DevOps practice. After the code is checked in and built, continuous delivery takes that code and delivers it to its final destination (usually production) as soon as possible after verifying that nothing is broken and functionality is in place. Continuous delivery requires extreme trust in both the method of delivery of code (what we usually call the pipeline) and the automated tests that are run to ensure functionality.

Continuous delivery is extremely risky without a comprehensive suite of automated tests, but it becomes much less risky than traditional deployment techniques once accomplished correctly. Why? Because if you are constantly releasing well-tested code you minimize the surface area of the application that you change each time.

What are Native Bindings/Native Libraries?

Unfortunately, not everything that you want to do on a computer is written in the language that the rest of your application uses. For example, lots of image processing tools aren’t written in JavaScript because they require performance beyond what JavaScript can provide. In our case we want to use SQLite to distribute some pre-packaged data that our AWS Lambda function can use. The popular JavaScript SQLite libraries simply provide a wrapper around a compiled binary, and unfortunately, binaries have to be compiled for the operating system and architecture they will run on. I develop on a Mac, and AWS Lambda uses a certain flavor of Linux, so I can’t npm install node-sqlite3 on my Mac, zip it up, and expect it to work on Lambda.
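To make the platform problem concrete, here is a minimal sketch (not part of the original setup) that compares the machine a native addon is built on with the machine it will run on. The values in LAMBDA_TARGET are assumptions for the Node.js 6.10 Lambda runtime (64-bit Linux, Node module ABI version 48), and describeBuildMachine and isCompatible are hypothetical helper names.

```javascript
'use strict';

// Assumed target: the Node.js 6.10 Lambda runtime (64-bit Linux, module ABI 48).
const LAMBDA_TARGET = { platform: 'linux', arch: 'x64', abi: '48' };

// Describe the machine this script is running on.
function describeBuildMachine() {
  return {
    platform: process.platform,   // e.g. 'darwin' on a Mac
    arch: process.arch,           // e.g. 'x64'
    abi: process.versions.modules // Node's native module ABI version, as a string
  };
}

// A compiled addon needs at least these three values to match the target.
function isCompatible(build, target) {
  return build.platform === target.platform &&
    build.arch === target.arch &&
    build.abi === target.abi;
}

const build = describeBuildMachine();
console.log('build machine:', JSON.stringify(build));
console.log('matches assumed Lambda target:', isCompatible(build, LAMBDA_TARGET));
```

Run on a Mac, the platform check alone fails, which is why the build has to happen on a Lambda-like Linux image.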

Why Git Submodules?

In my case I had a shared set of code that I’d written that I wanted to be a shared library across multiple other git repositories. The easiest way was to use submodules to include the shared library as a subdirectory in my Lambda project. However, submodules mean that you have to do some additional set up in your continuous integration pipeline. Since I went to a lot of trouble to figure it out, I’ll leave it in to hopefully help somebody else.

Technologies Used

Code Repository. Bitbucket. They have free unlimited private repositories.

Pipeline Provider. Bitbucket Pipelines. Naturally it integrates well with Bitbucket repositories, and they have a free tier. You configure it by writing YAML in a configuration file, declaring the infrastructure you need to build your application in an “infrastructure-as-code” approach. At work we use VSTS by Microsoft, which is actually a pretty nice solution. AWS has CodeBuild and CodePipeline, but it’s weak sauce, buggy, and has a clunky interface. Maybe in a couple months they’ll beef it up a bit and we’ll re-evaluate.

AWS Lambda framework. The Serverless Framework. A great framework that lets you easily deploy and develop AWS Lambda functions.

Package Manager. Yarn. Faster than NPM, but feel free to use NPM if you prefer; either works.

Language. JavaScript with the Node.js 6.10 runtime. The principle here will work for other languages that AWS supports, but this post will show Node.js examples.

Native bindings example library. better-sqlite3. It’s faster than node-sqlite3 and has a synchronous API, which is better for my case. My AWS Lambda Serverless Service is going to respond to an Alexa skill request, just one Alexa skill request, and Alexa is going to have to wait for the Lambda to finish, so it’s faster and easier to get rid of the additional complexity that the asynchronous style adds.
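As a rough illustration of why the synchronous API keeps things simple, here is a sketch of a callback-style Lambda handler. The makeHandler helper, the facts table, and its columns are all hypothetical; the commented-out lines show how better-sqlite3 might be wired in, assuming it is installed and a data.db file is bundled with the function. A stand-in database object is used so the sketch runs without the native module.

```javascript
'use strict';

// Build a Lambda handler around a function that opens the database.
// Injecting `openDb` keeps the sketch runnable without the native module.
function makeHandler(openDb) {
  let db = null; // reused while the Lambda container stays warm

  return function handler(event, context, callback) {
    try {
      if (!db) db = openDb();
      // Synchronous query: no callbacks or promises to chain.
      const row = db.prepare('SELECT answer FROM facts WHERE topic = ?').get(event.topic);
      callback(null, { answer: row ? row.answer : null });
    } catch (err) {
      callback(err);
    }
  };
}

// In a real service (assumption: better-sqlite3 installed, data.db bundled):
// const Database = require('better-sqlite3');
// exports.handler = makeHandler(() => new Database('data.db', { readonly: true }));

// Stand-in database with the same prepare().get() shape.
const fakeDb = {
  prepare: () => ({
    get: (topic) => (topic === 'lambda' ? { answer: 'serverless' } : undefined)
  })
};

const handler = makeHandler(() => fakeDb);
handler({ topic: 'lambda' }, {}, (err, result) => console.log(err, result));
```

Because the query returns its row directly, the handler body reads top to bottom with a single try/catch for error handling.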

Steps

OK so here’s how to do it.

- Put your shared code into a Bitbucket repository.

- Put your Serverless service code, which has the shared code as a submodule, into another Bitbucket repository.

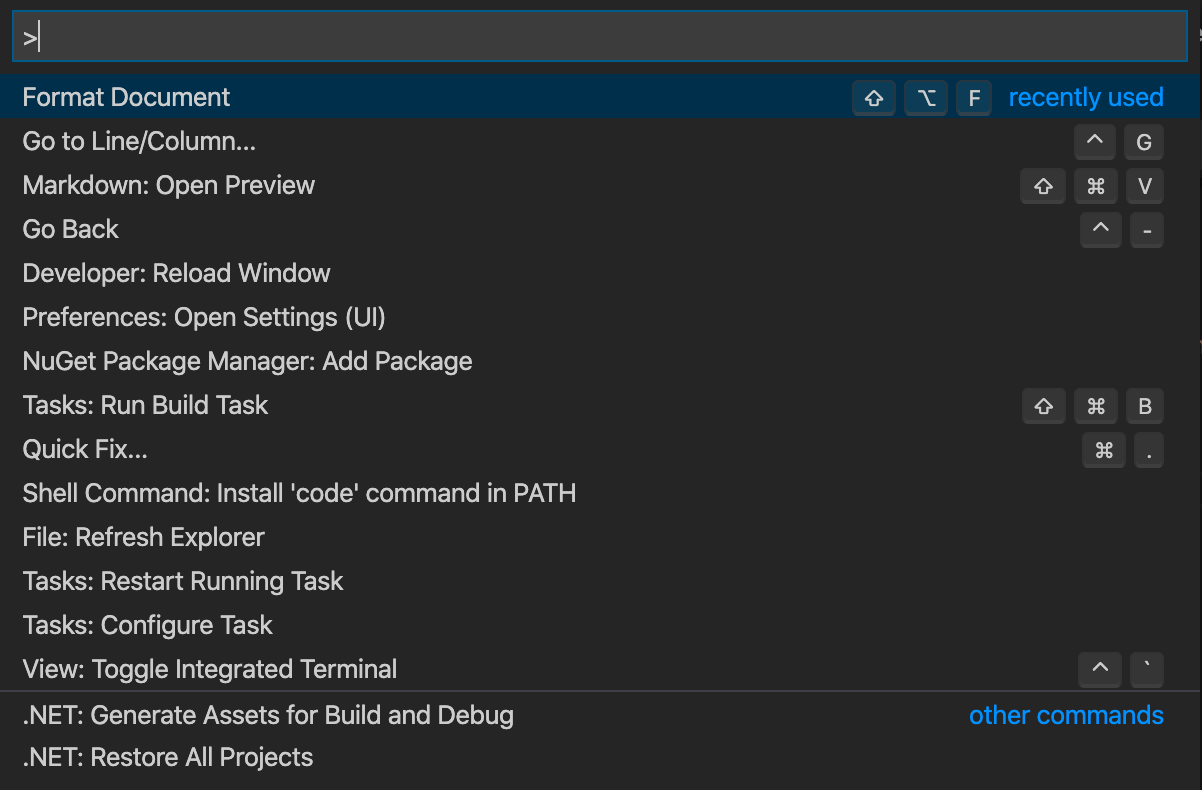

- Turn on Bitbucket Pipelines for your Serverless service repository. They will walk you through a wizard to add a bitbucket-pipelines.yml file to your repository. You can select the Node.js template.

- Configure your bitbucket-pipelines.yml file.

This is where things get tricky. Pipelines uses Docker images to build your code, so we need to select a good image where any code that needs to be compiled (think native bindings) will be compatible with AWS Lambda. In my testing the default node image is fine, but I went and found another image that purports to be an exact clone of the AWS Lambda environment called docker-lambda. That’s better, right? The Docker image name is just lambci/lambda, and we’ll tag on a particular version of it suitable for building things (in this case, we want to use Node.js 6.10 but there are other versions).

Without further ado, here is the bitbucket-pipelines.yml file:

    # This is the Docker image
    image: lambci/lambda:build-nodejs6.10

    pipelines:
      branches:
        # only execute these steps when checking into the "master" branch
        master:
          # Only one step is supported by Pipelines right now
          - step:
              script:
                # Configure submodules to point to the correct endpoint.
                # This essentially rewrites your git submodule configuration to use the app password you'll generate
                # Variables starting with $ are automatically replaced at build time by Pipelines
                - git config --file=.gitmodules submodule.$SHAREDLIBRARYSUBMODULENAME.url https://$CIUSERNAME:$CIPASSWORD@bitbucket.org/$CIUSERNAME/$SHAREDLIBRARYSUBMODULENAME.git
                # Restore submodules
                - git submodule update --init --recursive
                # Install yarn
                - curl -o- -L https://yarnpkg.com/install.sh | bash -s -- --version 0.18.1
                - export PATH=$HOME/.yarn/bin:$PATH
                # Install project dependencies
                - yarn
                # Or you can use NPM
                # - npm set progress=false
                # - npm install
                # Run tests
                - npm test
                # Add serverless CLI to environment
                - yarn global add serverless
                # Deploy to dev stage
                - sls deploy -s dev

- Grab an app password from your Bitbucket account. This will allow the build machine to check out the submodule code.

- Set your app password as a Pipelines environment variable. I set mine on the account level and called mine CIPASSWORD. I also added CIUSERNAME for my Bitbucket username.

- Set up a new IAM user in the AWS console to represent the CI pipeline. Generate a new access key and set its two parts as environment variables in Pipelines. I set these at the repository level, and if you call them AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY then the Serverless framework (or anything else that reads the standard AWS credential environment variables, such as the AWS CLI) will pick them up automatically. Nice!

- Set the environment variable SHAREDLIBRARYSUBMODULENAME to the name of your shared submodule.
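A missing variable tends to surface as a confusing git or AWS error halfway through the build, so a small preflight check can help. The following is only a sketch: missingVariables, maskedSubmoduleUrl, and preflight are hypothetical helpers, and the sample values are made up. It lists the five variables named in the steps above and rebuilds the submodule URL in the same shape as the git config line, with the password masked so it is safe to print.

```javascript
'use strict';

// Variables the pipeline in this post relies on.
const REQUIRED = [
  'CIUSERNAME',
  'CIPASSWORD',
  'SHAREDLIBRARYSUBMODULENAME',
  'AWS_ACCESS_KEY_ID',
  'AWS_SECRET_ACCESS_KEY'
];

// Return the names of required variables that are unset or empty.
function missingVariables(env) {
  return REQUIRED.filter((name) => !env[name]);
}

// Build the submodule URL in the same shape as the `git config` line,
// masking the password so the result can appear in build logs.
function maskedSubmoduleUrl(env) {
  return 'https://' + env.CIUSERNAME + ':****@bitbucket.org/' +
    env.CIUSERNAME + '/' + env.SHAREDLIBRARYSUBMODULENAME + '.git';
}

// Summarize whether the environment is ready for the build.
function preflight(env) {
  const missing = missingVariables(env);
  return missing.length === 0
    ? { ok: true, message: 'Submodule URL: ' + maskedSubmoduleUrl(env) }
    : { ok: false, message: 'Missing Pipelines variables: ' + missing.join(', ') };
}

// Example run with made-up values; a real script would pass process.env
// and exit with a non-zero code when `ok` is false.
console.log(preflight({
  CIUSERNAME: 'alice',
  CIPASSWORD: 'app-password',
  SHAREDLIBRARYSUBMODULENAME: 'shared-lib',
  AWS_ACCESS_KEY_ID: 'example-key-id',
  AWS_SECRET_ACCESS_KEY: 'example-secret'
}).message);
console.log(preflight({ CIUSERNAME: 'alice' }).message);
```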

Now check in some code to the master branch (like the new bitbucket-pipelines.yml file) and Pipelines will automatically kick off a new build.

Troubleshooting

Make sure that your serverless service is configured with the same runtime you’re building on. I was having tons of issues until I realized that I just needed to update my serverless.yml from:

    [...]
    provider:
      name: aws
      runtime: nodejs4.3
    [...]

to

    [...]
    provider:
      name: aws
      runtime: nodejs6.10
    [...]
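This kind of mismatch is easy to catch automatically. Here is a minimal sketch, assuming the runtime string has already been read out of serverless.yml (parsing the YAML itself is left out); parseRuntime and runtimeMatches are hypothetical helper names. It compares the configured runtime with the Node.js version doing the build.

```javascript
'use strict';

// Parse a Serverless runtime string such as 'nodejs6.10' into numbers.
function parseRuntime(runtime) {
  const match = /^nodejs(\d+)\.(\d+)$/.exec(runtime);
  if (!match) throw new Error('Unrecognized runtime: ' + runtime);
  return { major: Number(match[1]), minor: Number(match[2]) };
}

// True when the Node.js version has the same major and minor version
// as the configured runtime.
function runtimeMatches(runtime, nodeVersion) {
  const want = parseRuntime(runtime);
  const parts = nodeVersion.split('.').map(Number);
  return parts[0] === want.major && parts[1] === want.minor;
}

console.log(runtimeMatches('nodejs6.10', '6.10.3')); // true
console.log(runtimeMatches('nodejs4.3', '6.10.3'));  // false
// Compare against the Node.js actually running this script.
console.log(runtimeMatches('nodejs6.10', process.versions.node));
```

Running a check like this as an early pipeline step would fail the build before anything is compiled against the wrong Node.js version.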

Let’s review

So what have we set up?

- Every time new code is checked into the master branch it kicks off a Pipelines build in the cloud.

- The source code is downloaded to the AWS Lambda-compatible Docker container and all the dependencies are installed. If any dependencies (like better-sqlite3) need to be compiled, they are compiled to a binary that works with AWS Lambda.

- Automated tests are run.

- If the tests pass, the code is deployed to AWS Lambda.
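The "if the tests pass" guarantee comes from the build stopping at the first command that exits with a non-zero code. The sketch below imitates that fail-fast behavior locally; runSteps is a hypothetical helper, and the commands are placeholders standing in for the install, test, and deploy steps rather than the real ones.

```javascript
'use strict';

const spawnSync = require('child_process').spawnSync;

// Run shell commands in order and stop at the first one that fails,
// so later steps (such as a deploy) never run after a failed test.
function runSteps(commands) {
  const completed = [];
  for (const command of commands) {
    const result = spawnSync(command, { shell: true, stdio: 'inherit' });
    if (result.status !== 0) {
      return { ok: false, failed: command, completed: completed };
    }
    completed.push(command);
  }
  return { ok: true, failed: null, completed: completed };
}

// Placeholder commands: the second one simulates a failing test run.
const outcome = runSteps(['echo install', 'exit 1', 'echo deploy']);
console.log(JSON.stringify(outcome));
```

In this example the simulated deploy step is never reached, which mirrors what happens when npm test fails in the pipeline.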

Success!! Let me know in the comments if you run into any problems or if these steps worked for you.
